The advent of Large Language Models (LLMs) such as GPT-4 marks a significant milestone in artificial intelligence, showcasing remarkable capabilities in processing and generating human-like text. These models excel at tasks that require rapid information retrieval, pattern recognition, and the comprehension of straightforward sentences and instructions. Despite these impressive feats, however, their abilities still differ clearly from human cognition, particularly in creative thinking, contextual understanding, and emotional intelligence.

One of the primary strengths of LLMs is their ability to draw quickly on patterns absorbed from vast amounts of training data. Because they are trained on diverse datasets, they learn to recognize patterns and correlations within text. As a result, LLMs can efficiently generate summaries, translate between languages, and answer factual questions, often with high accuracy, although they can also produce confident but incorrect answers. Their proficiency in understanding simple sentences and following instructions also supports applications in automated customer service, content creation, and educational settings.

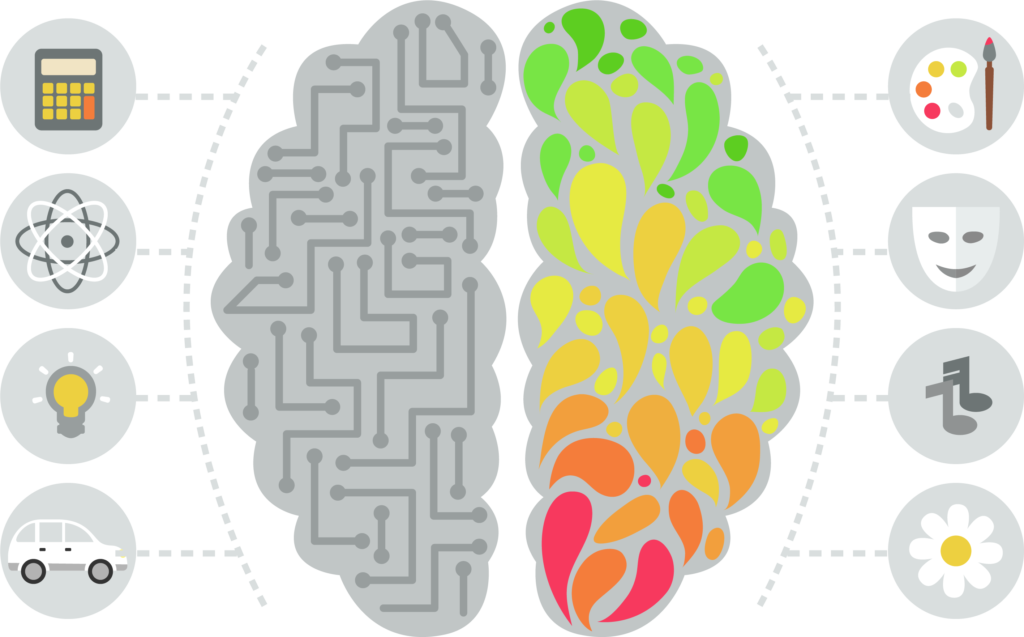

LLMs, however, operate fundamentally by manipulating symbols and patterns they encountered during training, without an intrinsic understanding of the content. This limitation becomes evident when their output is compared with human creative thinking and contextual understanding. Unlike LLMs, humans can draw on personal experience, emotion, and a nuanced grasp of social and cultural context to generate ideas, solve problems, and create art that resonates on a deeply human level. This creative process often involves connecting seemingly unrelated concepts in novel ways, something LLMs struggle to do without direct prompts or examples to imitate.

The understanding and expression of emotional nuance in speech and writing reveal another distinction between LLMs and humans. Emotional intelligence involves recognizing, interpreting, and responding to one's own emotions and those of others, a complex interplay that humans navigate with the help of social cues, historical context, and an inherent understanding of human nature. LLMs, by contrast, can simulate empathy by reproducing patterns found in their training data, but they do not experience or understand the emotions they express. Because this simulated empathy rests on statistical correlations rather than emotional insight, it can produce responses that are inappropriate or that lack the depth of understanding a person would bring naturally.

In conclusion, while LLMs excel at rapid information retrieval, pattern recognition, and following straightforward instructions, they differ significantly from humans in creative thought, deep contextual understanding, and emotional intelligence. These differences point to complementary roles: LLMs can assist with data-driven tasks, while humans excel where creativity, empathy, and contextual judgment are required.

As AI continues to evolve, understanding and leveraging these differences will be crucial in developing technologies that enhance human capabilities without attempting to replicate the irreplicable aspects of human cognition and emotional depth.